Using the union operation in the wrong place can be costly in Spark. For example, if you read data from a source, process it, then split it into two DataFrames based on certain filter conditions, perform additional operations on each DataFrame separately, and finally union them back together, Spark will reprocess the entire data twice — starting from the initial read.

Let’s take a demo.

There are two tables — Customer and Subscription — which contain the subscription start and end dates. We need to extract the name, and email ID of customers and start/end dates of their plan to downstream for further processing, after applying a 15% discount on yearly payable for only to those whose subscriptions have expired.

Code block for above mentioned ask

The below code deriving the status with current date and applying discount for only whose status is expired.

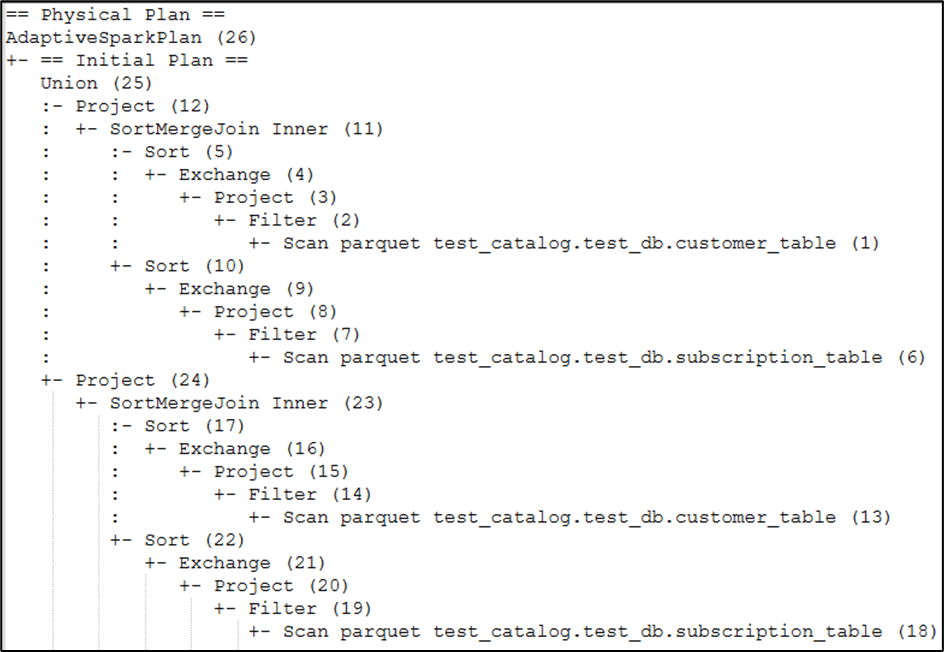

The physical plan below clearly shows that the entire process is executed twice, including the Sort-Merge Join, which involves data shuffling. While this may be acceptable for smaller datasets, it can significantly increase compute costs for larger datasets.

Let’s try with some optimization techniques.

- Adding cache

- Writing into disk & read back

- Changing the way of implementation

Adding cache

- Adding cache explicitly in the code to persist the joined data in memory, spark will read from memory and so not need to read from source again.

The plan below demonstrates adding cache explicitly, allowing the joined data to be retrieved from memory without re-reading or shuffling during joins.

This approach works well as long as there are no memory constraints. However, if the data volume is large and there is insufficient memory to persist it, data spilling may occur, increasing the likelihood of executor failures.

However, there are ways to control data spill, will take this on separately.

Writing into disk & read back

In case of data spill due to memory issue, the data can be written and re-read from the staged location.

The plan below illustrates writing the data to storage and then reading it back. Since there are two actions, the entire process is executed through two separate plans, and the Sort-Merge Join — which causes shuffling — occurs only once.

However, this approach increases I/O operations and may result in longer execution times. It is essentially a trade-off between processing overhead and I/O cost.

Changing the way of implementation

- In some cases, the same logic can be implemented simply by changing the implementation approach. This is possible for this demo, but not universally.

- Therefore, conduct a thorough analysis to determine whether the logic can be expressed using narrow transformations, which avoid shuffles — especially when operating on the same dataset.

The simplest plan, here you go.

Conclusion

Placing union operations incorrectly in Spark can double computation and cause unnecessary shuffling, impacting performance.

By leveraging caching, staging to disk, or rethinking implementation with narrow transformations, we can significantly reduce processing overhead.

The right choice depends on data size, memory availability, and the nature of the transformations involved.

Keep learning !!! Happy Engineering!!!

Leave a comment