In today’s fast-paced and highly volatile financial markets, having access to real-time stock quotes is crucial for making informed and precise decisions. Traditional methods of obtaining stock quotes often involve delays, which can lead to missed opportunities.

This project builds a scalable pipeline that ingests live stock quotes, processes them, and delivers them to analysts and decision-makers in near real time. It is built on Kafka and three AWS services: DynamoDB, Lambda, and SNS.

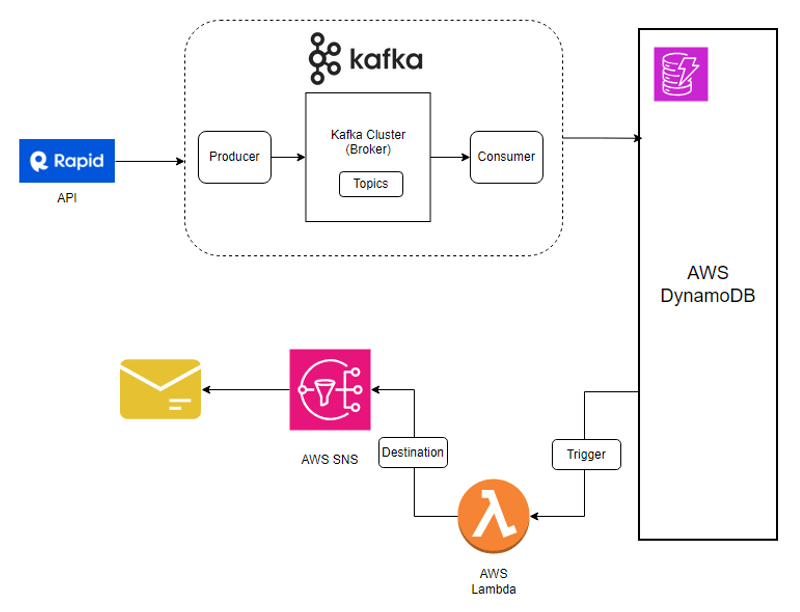

Architecture

The architecture consists of two independent workflows. The first ingests stock quotes from a RapidAPI endpoint, streams them through Kafka, and stores them in a DynamoDB table. The second is driven by changes in that DynamoDB table: each change triggers an AWS Lambda function, which sends a notification through AWS SNS to the subscribed email addresses.

Rapid API

RapidAPI is one of the leading API marketplaces, hosting thousands of APIs that are either free or available for a nominal charge. This project uses the “Latest Stock Price” API to fetch stock quotes from the live market.
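As a rough illustration of the fetch step, the sketch below builds (but does not send) an HTTP request with the two headers RapidAPI uses for authentication, `X-RapidAPI-Key` and `X-RapidAPI-Host`. The host name, the `/any` path, and the class name `QuoteRequestSketch` are assumptions made for this example and are not taken from the project; check the API's RapidAPI listing for the real values.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Duration;

final class QuoteRequestSketch {
    // Assumed host for the "Latest Stock Price" API; verify on the RapidAPI listing.
    static final String HOST = "latest-stock-price.p.rapidapi.com";

    // Builds a GET request carrying the RapidAPI auth headers. Nothing is sent here.
    static HttpRequest build(String path, String apiKey) {
        return HttpRequest.newBuilder()
                .uri(URI.create("https://" + HOST + path))
                .header("X-RapidAPI-Key", apiKey)
                .header("X-RapidAPI-Host", HOST)
                .timeout(Duration.ofSeconds(10))
                .GET()
                .build();
    }

    public static void main(String[] args) {
        // "/any" is a placeholder path and the key is a dummy value.
        HttpRequest request = build("/any", "YOUR_RAPIDAPI_KEY");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

To actually call the API, the request can be passed to `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`, with the key read from an environment variable rather than hard-coded.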

Kafka

Kafka is a distributed event store and stream-processing platform designed for real-time data streaming. In this project, Kafka acts as the central component for streaming data.

The Kafka producer pulls quotes from the RapidAPI endpoint and publishes them to the Kafka cluster. The Kafka consumer then reads this data from the cluster, processes it, and loads it into the database.
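A minimal sketch of the client configuration such a producer and consumer might use is shown below. The property keys and serializer class names are standard Kafka client settings; the broker address `localhost:9092`, the consumer group name, and the class name `KafkaConfigSketch` are assumptions for illustration, not values taken from the project.

```java
import java.util.Properties;

final class KafkaConfigSketch {
    // Assumed address of the single local broker (9092 is Kafka's default port).
    static final String BOOTSTRAP = "localhost:9092";

    // Settings for a producer that sends String keys and String (e.g. JSON) values.
    static Properties producerProps() {
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", BOOTSTRAP);
        p.setProperty("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        p.setProperty("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        p.setProperty("acks", "all"); // wait for the broker to acknowledge each write
        return p;
    }

    // Settings for a consumer in the given group, reading from the earliest offset
    // when the group has no committed position yet.
    static Properties consumerProps(String groupId) {
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", BOOTSTRAP);
        p.setProperty("group.id", groupId);
        p.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        p.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        p.setProperty("auto.offset.reset", "earliest");
        return p;
    }

    public static void main(String[] args) {
        System.out.println(producerProps());
        System.out.println(consumerProps("quote-loader")); // hypothetical group name
    }
}
```

With the `kafka-clients` library on the classpath, these objects can be handed to `new KafkaProducer<String, String>(props)` and `new KafkaConsumer<String, String>(props)` respectively.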

Although Kafka is generally deployed as a distributed cluster, this project runs it on localhost for simplicity during development.

DynamoDB

DynamoDB is the NoSQL database used to stage quotes before a notification is sent. It is a fully managed, serverless AWS service that scales to handle growing workloads.

Lambda

Lambda is a serverless compute service that automatically manages the infrastructure required to run code. In this project, a Lambda function is triggered by changes in the DynamoDB table and forwards them to SNS.
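The core of such a function can be sketched without the AWS SDK. A DynamoDB stream record carries an event name (`INSERT`, `MODIFY`, or `REMOVE`) and, when the stream is configured to include new images, the item's attributes in DynamoDB's typed form such as `{"S": "INFY"}`. The sketch below turns one such record into an email body. The attribute names, the message layout, and the class name `QuoteNotificationSketch` are hypothetical, and the real handler in the repository may differ.

```java
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;

final class QuoteNotificationSketch {
    // Returns the text to publish for one stream record, or empty if the
    // record should not produce a notification (e.g. a REMOVE event).
    static Optional<String> toMessage(String eventName,
                                      Map<String, Map<String, String>> newImage) {
        boolean upsert = "INSERT".equals(eventName) || "MODIFY".equals(eventName);
        if (!upsert || newImage == null || newImage.isEmpty()) {
            return Optional.empty();
        }
        StringBuilder body = new StringBuilder("Stock quote update\n");
        // TreeMap gives a stable, alphabetical attribute order in the message.
        for (Map.Entry<String, Map<String, String>> e : new TreeMap<>(newImage).entrySet()) {
            Map<String, String> typed = e.getValue(); // e.g. {"S": "INFY"} or {"N": "1520.5"}
            if (typed.isEmpty()) {
                continue; // skip malformed attributes
            }
            String value = typed.values().iterator().next();
            body.append(e.getKey()).append(": ").append(value).append('\n');
        }
        return Optional.of(body.toString());
    }

    public static void main(String[] args) {
        // Hypothetical item with two attributes.
        Map<String, Map<String, String>> image = Map.of(
                "symbol", Map.of("S", "INFY"),
                "lastPrice", Map.of("N", "1520.5"));
        toMessage("INSERT", image).ifPresent(System.out::print);
    }
}
```

In a deployed function, the returned text would be passed to an SNS publish call for the topic that the email addresses are subscribed to.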

SNS

Finally, SNS delivers the stock quote to the topic's subscribers, which in this project are email addresses.

Data Flow

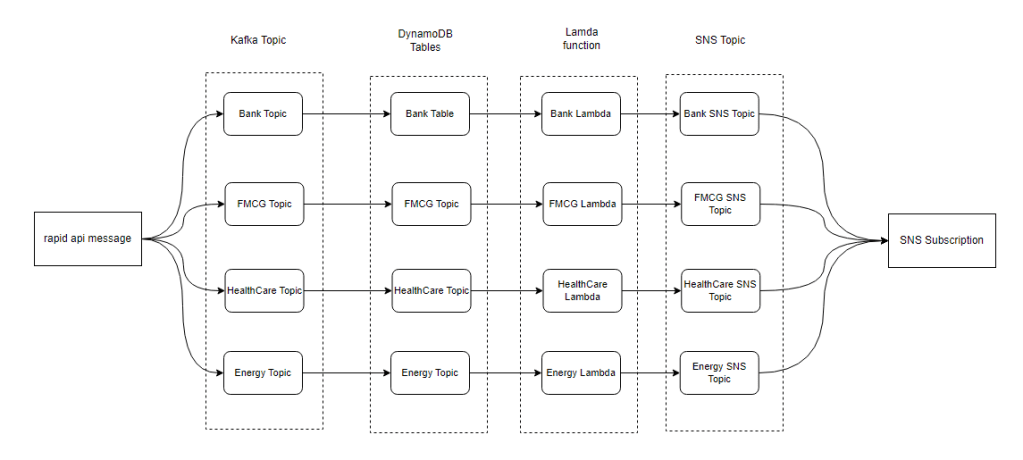

The diagram above shows the data flow from the API to the email notification. Each sector is given its own channel so that quotes from different sectors do not cross over.
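One way to keep sectors apart is to derive a channel name from the sector itself, so that every quote for a given sector always lands on the same channel. The sketch below is an assumption about how that could look: the `quotes-` prefix, the slug rules, and the class name `SectorChannelSketch` are hypothetical, and the post does not say whether a channel corresponds to a Kafka topic, an SNS topic, or both. The generated names use only lowercase letters, digits, and hyphens, which both services accept in topic names.

```java
import java.util.Locale;

final class SectorChannelSketch {
    static final String PREFIX = "quotes-"; // hypothetical naming convention

    // Maps a sector label such as "Financial Services" to a channel name
    // such as "quotes-financial-services".
    static String channelFor(String sector) {
        String slug = sector.trim().toLowerCase(Locale.ROOT)
                .replaceAll("[^a-z0-9]+", "-")   // collapse anything else into one hyphen
                .replaceAll("^-+|-+$", "");      // drop leading and trailing hyphens
        if (slug.isEmpty()) {
            throw new IllegalArgumentException("sector must contain a letter or digit");
        }
        return PREFIX + slug;
    }

    public static void main(String[] args) {
        System.out.println(channelFor("Financial Services"));
        System.out.println(channelFor("Oil & Gas"));
    }
}
```

Because the mapping is deterministic, the producer, the consumer, and the notification step can each compute the same channel name from a quote's sector without sharing a lookup table.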

Code Base – https://github.com/ArulrajGopal/stock_viewer

The code base is separated into two parts. The first handles data definition: creating the DynamoDB tables, Kafka topics, SNS topics, and Lambda functions. The second handles the actual execution, moving data from RapidAPI through to the SNS subscriptions.

Data definition part >> src/main/java/ddl

Data processing part >> src/main/java/pack1

Conclusion

The architecture is designed to handle high-volume, real-time data, offering a scalable pipeline that delivers stock quotes to analysts and decision-makers as they arrive. Running Kafka on localhost simplifies development while laying the groundwork for scaling up to a distributed cluster in production. The code base is organized into data definition and data processing components, making it modular and easy to manage.

Overall, the project demonstrates the power of modern cloud technologies and stream-processing platforms in addressing the challenges of real-time financial data analysis.
